There is so much to tell about the Western country in that day that it is hard to know where to start. One thing sets off a hundred others. The problem is to decide which one to tell first.

—JOHN STEINBECK, East of Eden

Chapter 4: Appenders

What is an Appender?

Logback delegates the task of writing a logging event to components called appenders. Appenders must implement the ch.qos.logback.core.Appender interface. The salient methods of this interface are discussed below.

Most of the methods in the Appender interface are setters and getters. A notable exception is the doAppend() method, which takes an object instance of type E as its only parameter. The actual type of E will vary depending on the logback module. Within the logback-classic module, E would be of type ILoggingEvent, and within the logback-access module it would be of type AccessEvent.

The doAppend() method is perhaps the most important in the logback framework. It is responsible for outputting the logging events in a suitable format to the appropriate output device.

Appenders are named entities. This ensures that they can be referenced by name, a quality that is essential in configuration scripts. The Appender interface extends the FilterAttachable interface. It follows that one or more filters can be attached to an appender instance. Filters are discussed in detail in a subsequent chapter.

Appenders are ultimately responsible for outputting logging events. However, they may delegate the actual formatting of the event to a Layout or to an Encoder object.

Each layout/encoder is associated with one and only one appender, referred to as the owning appender. Some appenders have a built-in or fixed event format. Consequently, they neither require nor have a layout/encoder. For example, the SocketAppender simply serializes logging events before transmitting them over the wire.

AppenderBase

The ch.qos.logback.core.AppenderBase class is an abstract class implementing the Appender interface. It provides basic functionality shared by all appenders, such as methods for getting or setting their name, their activation status, their layout and their filters. It is the super-class of all appenders shipped with logback. Although an abstract class, AppenderBase actually implements the doAppend() method of the Appender interface.

Perhaps the clearest way to discuss the AppenderBase class is by presenting an excerpt of actual source code.

public synchronized void doAppend(E eventObject) {

  // prevent re-entry.
  if (guard) {
    return;
  }

  try {
    guard = true;

    if (!this.started) {
      if (statusRepeatCount++ < ALLOWED_REPEATS) {
        addStatus(new WarnStatus(
            "Attempted to append to non started appender [" + name + "].", this));
      }
      return;
    }

    if (getFilterChainDecision(eventObject) == FilterReply.DENY) {
      return;
    }

    // ok, we now invoke the derived class's implementation of append
    this.append(eventObject);

  } finally {
    guard = false;
  }
}

This implementation of the doAppend() method is synchronized. It follows that logging to the same appender from different threads is safe. While a thread, say T, is executing the doAppend() method, subsequent calls by other threads are queued until T leaves the doAppend() method, ensuring T's exclusive access to the appender.
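The following sketch shows what the synchronized keyword buys, and it runs without logback. SyncCountingAppender is a hypothetical stand-in, not a logback class. Several threads call its synchronized doAppend(), and the append() body performs an unguarded count++. Because only one thread at a time gets past the lock, no increment is lost.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for an AppenderBase subclass; not a logback class.
class SyncCountingAppender {
    private int count = 0; // deliberately not atomic

    // Like AppenderBase.doAppend(), the entry point is synchronized,
    // so only one thread at a time can reach append().
    public synchronized void doAppend(String event) {
        append(event);
    }

    // Read-modify-write that could lose updates without the lock above.
    protected void append(String event) {
        count++;
    }

    public synchronized int getCount() {
        return count;
    }

    // Starts `threads` threads that each append `perThread` events,
    // waits for all of them, and returns the final count.
    static int runConcurrently(int threads, int perThread) {
        SyncCountingAppender appender = new SyncCountingAppender();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    appender.doAppend("event " + i);
                }
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return appender.getCount();
    }
}
```

If you remove synchronized from doAppend(), the final count may fall short of threads * perThread. Whether it does depends on thread scheduling, which is exactly why the lock is needed.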

Since such synchronization is not always appropriate, logback ships with ch.qos.logback.core.UnsynchronizedAppenderBase, which is very similar to the AppenderBase class. For the sake of conciseness, we will be discussing UnsynchronizedAppenderBase in the remainder of this document.

The first thing the doAppend() method does is to check whether the guard is set to true. If it is, the method immediately exits. If the guard is not set, it is set to true at the next statement. The guard ensures that the doAppend() method will not recursively call itself. Just imagine that a component, called somewhere beyond the append() method, wants to log something. Its call could be directed to the very same appender that just called it, resulting in an infinite loop and a stack overflow.
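The re-entry scenario takes only a few lines to reproduce. In the hypothetical GuardedAppender below (not logback code), append() deliberately logs back into the same appender. This stands in for a component invoked while writing. Without the guard, the call would recurse until a StackOverflowError. With the guard, the nested call returns immediately. Java's intrinsic locks are reentrant, so the synchronized keyword alone does not stop a nested call from the same thread.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical appender illustrating the re-entry guard; not logback code.
class GuardedAppender {
    private boolean guard = false;
    final List<String> written = new ArrayList<>();

    public synchronized void doAppend(String event) {
        // A nested call from the same thread finds the guard set and bails out.
        if (guard) {
            return;
        }
        try {
            guard = true;
            append(event);
        } finally {
            // Always release the guard, even if append() throws.
            guard = false;
        }
    }

    protected void append(String event) {
        written.add(event);
        // Simulates a component, invoked while writing, that itself logs
        // and whose output is routed back to this very appender.
        doAppend("nested log from inside append(): " + event);
    }
}
```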

In the following statement, we check whether the started field is true. If it is not, doAppend() will issue a warning and return. In other words, once an appender is closed, it is impossible to write to it. Appender objects implement the LifeCycle interface, which implies that they implement the start(), stop() and isStarted() methods. After setting all the properties of an appender, Joran, logback's configuration framework, calls the start() method to signal the appender to activate its properties. Depending on its kind, an appender may fail to start if certain properties are missing or because of interference between various properties. For example, given that file creation depends on truncation mode, FileAppender cannot act on the value of its File option until the value of the Append option is also known with certainty. The explicit activation step ensures that an appender acts on its properties only after their values become known.

If the appender could not be started or if it has been stopped, a warning message will be issued through logback's internal status management system. After several attempts, in order to avoid flooding the internal status system with copies of the same warning message, the doAppend() method will stop issuing these warnings.
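The warn-then-go-quiet behavior comes down to a counter. The sketch below is hypothetical. The class name and the value 3 for ALLOWED_REPEATS are illustrative assumptions, not values taken from logback.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the "warn a few times, then go quiet" logic.
class QuietWhenStoppedAppender {
    // Illustrative limit; the real constant's value is not stated in the text.
    static final int ALLOWED_REPEATS = 3;

    private boolean started = false;
    private int statusRepeatCount = 0;
    final List<String> warnings = new ArrayList<>();
    final List<String> written = new ArrayList<>();

    void start() { started = true; }
    void stop() { started = false; }

    void doAppend(String event) {
        if (!started) {
            // Post-increment: only the first ALLOWED_REPEATS attempts produce a warning.
            if (statusRepeatCount++ < ALLOWED_REPEATS) {
                warnings.add("Attempted to append to non started appender.");
            }
            return; // the event is dropped either way
        }
        written.add(event);
    }
}
```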

The next if statement checks the result of the attached filters. Depending on the decision resulting from the filter chain, events can be denied or explicitly accepted. In the absence of a decision by the filter chain, events are accepted by default.
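The filter check can be sketched as follows. As a simplification, the sketch assumes that filters are consulted in order and that the first one returning DENY or ACCEPT decides; filters are covered in their own chapter. Reply and FilterChain are hypothetical names, not logback's own types. Only an explicit DENY drops the event, so an empty chain, or one where every filter stays neutral, lets the event through.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Hypothetical three-valued filter reply.
enum Reply { DENY, NEUTRAL, ACCEPT }

class FilterChain<E> {
    private final List<Function<E, Reply>> filters = new ArrayList<>();

    void addFilter(Function<E, Reply> filter) {
        filters.add(filter);
    }

    // Filters are consulted in order; the first DENY or ACCEPT settles the matter.
    // If every filter stays NEUTRAL (or there are none), the result is NEUTRAL.
    Reply decide(E event) {
        for (Function<E, Reply> f : filters) {
            Reply r = f.apply(event);
            if (r != Reply.NEUTRAL) {
                return r;
            }
        }
        return Reply.NEUTRAL;
    }

    // Mirrors the doAppend() test: only an explicit DENY drops the event.
    boolean shouldAppend(E event) {
        return decide(event) != Reply.DENY;
    }
}
```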

The doAppend() method then invokes the derived class's implementation of the append() method. This method does the actual work of appending the event to the appropriate device.

Finally, the guard is released so as to allow a subsequent invocation of the doAppend() method.

For the remainder of this manual, we reserve the term "option" or alternatively "property" for any attribute that is inferred dynamically using JavaBeans introspection through setter and getter methods.

Logback-core

Logback-core lays the foundation upon which the other logback modules are built. In general, the components in logback-core require some, albeit minimal, customization. However, in the next few sections, we describe several appenders which are ready for use out of the box.

OutputStreamAppender

OutputStreamAppender appends events to a java.io.OutputStream. This class provides basic services that other appenders build upon. Users do not usually instantiate OutputStreamAppender objects directly, since in general the java.io.OutputStream type cannot be conveniently mapped to a string, as there is no way to specify the target OutputStream object in a configuration script. Simply put, you cannot configure an OutputStreamAppender from a configuration file.

However, this does not mean that OutputStreamAppender lacks configurable properties. These properties are described next.

| Property Name | Type | Description |
|---|---|---|
| encoder | Encoder | Determines the manner in which an event is written to the underlying OutputStream. Encoders are described in a dedicated chapter. |
| immediateFlush | boolean | The default value for immediateFlush is 'true'. Immediate flushing of the output stream ensures that logging events are immediately written out and will not be lost in case your application exits without properly closing appenders. On the other hand, setting this property to 'false' is likely to quadruple (your mileage may vary) logging throughput. Again, if immediateFlush is set to 'false' and if appenders are not closed properly when your application exits, then logging events not yet written to disk may be lost. |

The OutputStreamAppender is the super-class of three other appenders, namely ConsoleAppender, FileAppender and, through FileAppender, RollingFileAppender. The next figure illustrates the class diagram for OutputStreamAppender and its subclasses.

ConsoleAppender

The ConsoleAppender, as the name indicates, appends to the console, or more precisely to System.out or System.err, the former being the default target. ConsoleAppender formats events with the help of an encoder specified by the user. Encoders will be discussed in a subsequent chapter. Both System.out and System.err are of type java.io.PrintStream. Consequently, they are wrapped inside an OutputStreamWriter which buffers I/O operations.

WARNING Please note the console is comparatively slow, even very slow. You should avoid logging to the console in production, especially in high volume systems.

| Property Name | Type | Description |
|---|---|---|
| encoder | Encoder | See OutputStreamAppender properties. |
| target | String | One of the String values System.out or System.err. The default target is System.out. |
| withJansi | boolean | By default, the withJansi property is set to |

Here is a sample configuration that uses ConsoleAppender.

Example: ConsoleAppender configuration (logback-examples/src/main/resources/chapters/appenders/conf/logback-Console.xml)

<configuration>

  <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
    <!-- encoders are assigned the type
         ch.qos.logback.classic.encoder.PatternLayoutEncoder by default -->
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} -%kvp- %msg %n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="STDOUT" />
  </root>
</configuration>


After you have set your current path to the logback-examples directory and set up your class path, you can give the above configuration file a whirl by issuing the following command:

java chapters.appenders.ConfigurationTester src/main/resources/chapters/appenders/conf/logback-Console.xml

FileAppender

The FileAppender, a subclass of OutputStreamAppender, appends log events into a file. The target file is specified by the File option. If the file already exists, it is either appended to or truncated, depending on the value of the append property.

| Property Name | Type | Description |
|---|---|---|
| append | boolean | If true, events are appended at the end of an existing file. Otherwise, if append is false, any existing file is truncated. The append option is set to true by default. |
| encoder | Encoder | See OutputStreamAppender properties. |
| file | String | The name of the file to write to. If the file does not exist, it is created. On the MS Windows platform, users frequently forget to escape backslashes. For example, the value c:\temp\test.log is not likely to be interpreted properly, as '\t' is an escape sequence interpreted as a single tab character (\u0009). Correct values can be specified as c:/temp/test.log or alternatively as c:\\temp\\test.log. The File option has no default value. If the parent directory of the file does not exist, |
| bufferSize | FileSize | The bufferSize option sets the size of the output buffer in case the immediateFlush option is set to false. The default value for bufferSize is 8192. The value 256KB seems to be sufficient even in cases of very heavy and persistent loads. Options defined in units of "FileSize" can be specified in bytes, kilobytes, megabytes or gigabytes by suffixing a numeric value with KB, MB and respectively GB. For example, 5000000, 5000KB, 5MB and 2GB are all valid values, with the first three being roughly equivalent. |
| prudent | boolean | In prudent mode, FileAppender will safely write to the specified file, even in the presence of other FileAppender instances running in different JVMs, potentially running on different hosts. The default value for prudent mode is false. Prudent mode can be used in conjunction with RollingFileAppender. Prudent mode implies that the append property is automatically set to true. Prudent mode relies on exclusive file locks. Experiments show that file locks approximately triple (x3) the cost of writing a logging event. On an "average" PC writing to a file located on a local hard disk, when prudent mode is off, it takes about 10 microseconds to write a single logging event. When prudent mode is on, it takes approximately 30 microseconds to output a single logging event. This translates to logging throughput of 100'000 events per second when prudent mode is off and approximately 33'000 events per second in prudent mode. Prudent mode effectively serializes I/O operations between all JVMs writing to the same file. Thus, as the number of JVMs competing to access a file increases, so will the delay incurred by each I/O operation. As long as the total number of I/O operations is in the order of 20 log requests per second, the impact on performance should be negligible. Applications generating 100 or more I/O operations per second can see an impact on performance and should avoid using prudent mode. Networked file locks: When the log file is located on a networked file system, the cost of prudent mode is even greater. Just as importantly, file locks over a networked file system can sometimes be strongly biased, such that the process currently owning the lock immediately re-obtains the lock upon its release. Thus, while one process hogs the lock for the log file, other processes starve waiting for the lock to the point of appearing deadlocked. The impact of prudent mode is highly dependent on network speed as well as on OS implementation details. We provide a very small application called FileLockSimulator which can help you simulate the behavior of prudent mode in your environment. |
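The core idea behind prudent mode is to take an exclusive lock on the file before each write. The JDK's FileChannel.lock() can illustrate this. The sketch below is hypothetical: LockedFileWriter is not a logback class, and it shows only the lock, write and release cycle, not logback's actual prudent-mode implementation.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.channels.FileLock;
import java.nio.charset.StandardCharsets;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Sketch of lock-then-append, the idea behind prudent mode; not logback code.
class LockedFileWriter {
    static void appendLine(Path file, String line) {
        try (FileChannel channel = FileChannel.open(file,
                StandardOpenOption.CREATE, StandardOpenOption.WRITE, StandardOpenOption.APPEND)) {
            // Blocks until this process holds an exclusive lock on the whole file.
            try (FileLock lock = channel.lock()) {
                ByteBuffer buf = ByteBuffer.wrap((line + "\n").getBytes(StandardCharsets.UTF_8));
                while (buf.hasRemaining()) {
                    channel.write(buf);
                }
            } // lock released here, letting other processes take their turn
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Every writer in every JVM must follow the same protocol for the lock to help. Each call also pays for acquiring and releasing the lock, which is consistent with the extra per-event cost described above. File locks are held on behalf of the whole JVM, so two threads of one JVM that try to lock overlapping regions get an OverlappingFileLockException instead of waiting. In-process callers therefore still need their own synchronization.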

Immediate Flush: By default, each log event is immediately flushed to the underlying output stream. This default approach is safer in the sense that logging events are not lost in case your application exits without properly closing appenders. However, for significantly increased logging throughput, you may want to set the immediateFlush property to false.
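Plain JDK streams make the trade-off behind immediateFlush visible. FlushDemo is a hypothetical illustration, not logback code. It writes one event through an 8192-byte BufferedOutputStream, the default bufferSize mentioned above, and never closes the stream, just as an application would if it exited without stopping its appenders. The event's bytes reach the underlying device only when the event is flushed.

```java
import java.io.BufferedOutputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Illustrates what immediateFlush trades off, using a plain JDK buffer.
class FlushDemo {
    // Writes one event through an 8192-byte buffer and reports how many bytes
    // reached the underlying "device" (here an in-memory stream).
    static int bytesVisibleAfterWrite(boolean immediateFlush) {
        ByteArrayOutputStream device = new ByteArrayOutputStream();
        BufferedOutputStream out = new BufferedOutputStream(device, 8192);
        try {
            out.write("INFO something happened\n".getBytes(StandardCharsets.UTF_8));
            if (immediateFlush) {
                out.flush(); // push the bytes through on every event
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // No close(): this models an application that exits without stopping
        // its appenders. Anything still buffered would be lost.
        return device.size();
    }
}
```

Without the flush, the event sits in the buffer and nothing reaches the device. Skipping that per-event flush is where the throughput gain comes from.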

Below is an example of a configuration file for FileAppender:

Example: FileAppender configuration (logback-examples/src/main/resources/chapters/appenders/conf/logback-fileAppender.xml)

<configuration>

  <appender name="FILE" class="ch.qos.logback.core.FileAppender">
    <file>testFile.log</file>
    <append>true</append>
    <!-- set immediateFlush to false for much higher logging throughput -->
    <immediateFlush>true</immediateFlush>
    <!-- encoders are assigned the type
         ch.qos.logback.classic.encoder.PatternLayoutEncoder by default -->
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} -%kvp- %msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>


After changing the current directory to logback-examples, run this example by launching the following command:

java chapters.appenders.ConfigurationTester src/main/resources/chapters/appenders/conf/logback-fileAppender.xml

Uniquely named files (by timestamp)

During the application development phase or in the case of short-lived applications, e.g. batch applications, it is desirable to create a new log file at each new application launch. This is fairly easy to do with the help of the <timestamp> element. Here's an example.

Example: Uniquely named FileAppender configuration by timestamp (logback-examples/src/main/resources/chapters/appenders/conf/logback-timestamp.xml)

<configuration>

  <!-- Insert the current time formatted as "yyyyMMdd'T'HHmmss" under
       the key "bySecond" into the logger context. This value will be
       available to all subsequent configuration elements. -->
  <timestamp key="bySecond" datePattern="yyyyMMdd'T'HHmmss"/>

  <appender name="FILE" class="ch.qos.logback.core.FileAppender">
    <!-- use the previously created timestamp to create a uniquely
         named log file -->
    <file>log-${bySecond}.txt</file>
    <encoder>
      <pattern>%logger{35} -%kvp- %msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>


The timestamp element takes two mandatory attributes, key and datePattern, and an optional timeReference attribute. The key attribute is the name of the key under which the timestamp will be available to subsequent configuration elements as a variable. The datePattern attribute denotes the date pattern used to convert the current time (at which the configuration file is parsed) into a string. The date pattern should follow the conventions defined in SimpleDateFormat. The timeReference attribute denotes the time reference for the time stamp. The default is the interpretation/parsing time of the configuration file, i.e. the current time. However, under certain circumstances it might be useful to use the context birth time as time reference. This can be accomplished by setting the timeReference attribute to "contextBirth".
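SimpleDateFormat can mimic what the <timestamp> element contributes to the file name. The sketch below is hypothetical and does not reflect how logback performs variable substitution internally. It formats a reference time with the bySecond pattern and substitutes the result into log-${bySecond}.txt. The reference time would be the parse time, or the context birth time when timeReference is "contextBirth". Locale.US is an assumption made here to keep the digits ASCII.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;

// Hypothetical sketch of <timestamp key="bySecond" datePattern="yyyyMMdd'T'HHmmss"/>
// followed by <file>log-${bySecond}.txt</file>. Not logback code.
class TimestampedFileName {
    static String fileNameFor(Date reference) {
        // Same SimpleDateFormat pattern as in the configuration above.
        String bySecond = new SimpleDateFormat("yyyyMMdd'T'HHmmss", Locale.US).format(reference);
        // Substitute the variable into the file name template.
        return "log-${bySecond}.txt".replace("${bySecond}", bySecond);
    }
}
```

For example, fileNameFor(new Date()) yields a name such as log-20240102T030405.txt, so each launch that falls in a different second gets its own file.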

Experiment with the <timestamp> element by running the command:

java chapters.appenders.ConfigurationTester src/main/resources/chapters/appenders/conf/logback-timestamp.xml

To use the logger context birthdate as time reference, you would set the timeReference attribute to "contextBirth" as shown below.

Example: Timestamp using context birthdate as time reference (logback-examples/src/main/resources/chapters/appenders/conf/logback-timestamp-contextBirth.xml)

<configuration>
  <timestamp key="bySecond" datePattern="yyyyMMdd'T'HHmmss"
             timeReference="contextBirth"/>
  ...
</configuration>

RollingFileAppender

RollingFileAppender extends FileAppender with the capability to roll over log files. For example, RollingFileAppender can log to a file named log.txt and, once a certain condition is met, change its logging target to another file.

There are two important subcomponents that interact with RollingFileAppender. The first RollingFileAppender subcomponent, namely RollingPolicy (see below), is responsible for undertaking the actions required for a rollover. A second subcomponent of RollingFileAppender, namely TriggeringPolicy (see below), will determine if and exactly when rollover occurs. Thus, RollingPolicy is responsible for the what and TriggeringPolicy is responsible for the when.

To be of any use, a RollingFileAppender must have both a RollingPolicy and a TriggeringPolicy set up. However, if its RollingPolicy also implements the TriggeringPolicy interface, then only the former needs to be specified explicitly.
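Two hypothetical interfaces can sketch this division of labor, along with the shortcut for a policy that plays both roles. RollingRole covers the what and TriggeringRole covers the when. These are simplified stand-ins, not logback's RollingPolicy and TriggeringPolicy, and RollingSetup and DailyPolicy are likewise illustrative names.

```java
// Hypothetical mini-interfaces mirroring the two roles described above.
interface RollingRole {          // the "what"
    String archiveNameFor(String period);
}

interface TriggeringRole {       // the "when"
    boolean isTriggeringEvent(long eventTimeMillis);
}

class RollingSetup {
    final RollingRole rolling;
    final TriggeringRole triggering;

    RollingSetup(RollingRole rolling, TriggeringRole triggering) {
        // A rolling policy that also plays the triggering role fills the gap.
        if (triggering == null && rolling instanceof TriggeringRole) {
            triggering = (TriggeringRole) rolling;
        }
        if (rolling == null || triggering == null) {
            throw new IllegalStateException("both a rolling and a triggering policy are required");
        }
        this.rolling = rolling;
        this.triggering = triggering;
    }
}

// Plays both roles, the way a time-based policy does.
class DailyPolicy implements RollingRole, TriggeringRole {
    private final long nextMidnightMillis;

    DailyPolicy(long nextMidnightMillis) {
        this.nextMidnightMillis = nextMidnightMillis;
    }

    public String archiveNameFor(String period) {
        return "log." + period + ".txt";
    }

    public boolean isTriggeringEvent(long eventTimeMillis) {
        return eventTimeMillis >= nextMidnightMillis;
    }
}
```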

Here are the available properties for RollingFileAppender:

| Property Name | Type | Description |
|---|---|---|
| file | String | See FileAppender properties. Note that file can be null, in which case the output is written only to the target specified by the RollingPolicy. |
| append | boolean | See FileAppender properties. |
| encoder | Encoder | See OutputStreamAppender properties. |
| rollingPolicy | RollingPolicy | This option is the component that will dictate RollingFileAppender's behavior when rollover occurs. See more information below. |
| triggeringPolicy | TriggeringPolicy | This option is the component that will tell RollingFileAppender when to activate the rollover procedure. See more information below. |
| prudent | boolean | FixedWindowRollingPolicy is not supported in prudent mode. See FileAppender properties. |

Overview of rolling policies

RollingPolicy is responsible for the rollover procedure, which involves file moving and renaming.

The RollingPolicy interface is presented below:

package ch.qos.logback.core.rolling;

import ch.qos.logback.core.FileAppender;
import ch.qos.logback.core.spi.LifeCycle;

public interface RollingPolicy extends LifeCycle {

  public void rollover() throws RolloverFailure;
  public String getActiveFileName();
  public CompressionMode getCompressionMode();
  public void setParent(FileAppender appender);
}

The rollover method accomplishes the work involved in archiving the current log file. The getActiveFileName() method is called to compute the file name of the current log file (where live logs are written to). As indicated by the getCompressionMode method, a RollingPolicy is also responsible for determining the compression mode. Lastly, a RollingPolicy is given a reference to its parent via the setParent method.
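Below is a logback-free sketch of what a rollover does when the file property is set. The active file keeps its name, while its content moves to an archive named after the period that just ended. RenamingRollover is hypothetical. Its methods only loosely correspond to getActiveFileName() and rollover() above, and it ignores compression and the parent appender.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Hypothetical sketch of a rename-based rollover; not logback code.
class RenamingRollover {
    private final Path activeFile;

    RenamingRollover(Path activeFile) {
        this.activeFile = activeFile;
    }

    // Loose counterpart of getActiveFileName(): live logs always go here.
    Path getActiveFile() {
        return activeFile;
    }

    // Loose counterpart of rollover(): archive the current file under a name
    // built from the period that just ended, then start a fresh, empty active file.
    Path rollover(String endedPeriod) {
        Path archive = activeFile.resolveSibling(baseName() + "." + endedPeriod);
        try {
            Files.move(activeFile, archive, StandardCopyOption.REPLACE_EXISTING);
            Files.createFile(activeFile);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return archive;
    }

    // "foo.txt" -> "foo"
    private String baseName() {
        String name = activeFile.getFileName().toString();
        int dot = name.lastIndexOf('.');
        return dot > 0 ? name.substring(0, dot) : name;
    }
}
```

With an active file foo.txt and the period 2006-11-23, the archive becomes foo.2006-11-23 and a new, empty foo.txt takes its place.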

TimeBasedRollingPolicy

TimeBasedRollingPolicy is possibly the most popular rolling policy. It defines a rollover policy based on time, for example by day or by month. TimeBasedRollingPolicy assumes the responsibility for rollover as well as for the triggering of said rollover. Indeed, TimeBasedRollingPolicy implements both the RollingPolicy and TriggeringPolicy interfaces.

TimeBasedRollingPolicy's configuration takes one mandatory fileNamePattern property and several optional properties.

| Property Name | Type | Description |
|---|---|---|
| fileNamePattern | String | The mandatory fileNamePattern property defines the name of the rolled-over (archived) log files. Its value should consist of the name of the file, plus a suitably placed %d conversion specifier. The %d conversion specifier may contain a date-and-time pattern as specified by the java.text.SimpleDateFormat class. If the date-and-time pattern is omitted, then the default pattern yyyy-MM-dd is assumed. The rollover period is inferred from the value of fileNamePattern. Note that the file property in RollingFileAppender can be either set or omitted. If you choose to omit the file property, then the active file will be computed anew for each period based on the value of fileNamePattern. In this configuration no rollover occurs, unless file compression is specified. The examples below should clarify this point. The date-and-time pattern, as found within the curly braces of %d{}, follows java.text.SimpleDateFormat conventions. The forward slash '/' or backward slash '\' characters anywhere within the fileNamePattern property or within the date-and-time pattern will be interpreted as directory separators. Multiple %d specifiers: It is possible to specify multiple %d specifiers, but only one of them can be primary, i.e. used to infer the rollover period. All other tokens must be marked as auxiliary by passing the 'aux' parameter (see examples below). Multiple %d specifiers allow you to organize archive files in a folder structure different from that of the rollover period. For example, the file name pattern /var/log/%d{yyyy/MM, aux}/myapplication.%d{yyyy-MM-dd}.log organizes log folders by year and month but rolls over log files every day at midnight. TimeZone: Under certain circumstances, you might wish to roll over log files according to a clock in a timezone different from that of the host. It is possible to pass a timezone argument following the date-and-time pattern within the %d conversion specifier, for example aFolder/test.%d{yyyy-MM-dd-HH, UTC}.log. If the specified timezone identifier is unknown or misspelled, the GMT timezone is assumed, as dictated by the TimeZone.getTimeZone(String) method specification. |
| maxHistory | int | The optional maxHistory property controls the maximum number of archive files to keep, asynchronously deleting older files. For example, if you specify monthly rollover and set maxHistory to 6, then 6 months' worth of archive files will be kept, with files older than 6 months deleted. Note that as old archived log files are removed, any folders which were created for the purpose of log file archiving will be removed as appropriate. Setting maxHistory to zero disables archive removal. By default, maxHistory is set to zero, i.e. by default there is no archive removal. |
| totalSizeCap | FileSize | The optional totalSizeCap property controls the total size of all archive files. Oldest archives are deleted asynchronously when the total size cap is exceeded. The totalSizeCap property requires the maxHistory property to be set as well. Moreover, the "max history" restriction is always applied first and the "total size cap" restriction applied second. In other words, if enabled, both restrictions are applied, albeit sequentially. The totalSizeCap property can be specified in units of bytes, kilobytes, megabytes or gigabytes by suffixing a numeric value with KB, MB and respectively GB. For example, 5000000, 5000KB, 5MB and 2GB are all valid values, with the first three being roughly equivalent. A numerical value with no suffix is taken to be in units of bytes. By default, totalSizeCap is set to zero, meaning that there is no total size cap. |
| cleanHistoryOnStart | boolean | If set to true, archive removal will be executed on appender start up. By default, this property is set to false. Archive removal is normally performed during rollover. However, some applications may not live long enough for rollover to be triggered. It follows that for such short-lived applications archive removal may never get a chance to execute. By setting cleanHistoryOnStart to true, archive removal is performed at appender start up. |
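Size-valued properties such as totalSizeCap and bufferSize accept an optional unit suffix. The parser below is a hypothetical sketch, not logback's own FileSize class. It assumes binary multiples (1 KB = 1024 bytes) and treats suffixes case-insensitively.

```java
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical parser for FileSize-style values such as "5000", "5000KB", "5MB", "2GB".
// Assumption: KB/MB/GB are binary multiples (1 KB = 1024 bytes).
class FileSizeSketch {
    private static final Pattern SIZE =
            Pattern.compile("([0-9]+)\\s*(|KB|MB|GB)", Pattern.CASE_INSENSITIVE);

    static long parse(String text) {
        Matcher m = SIZE.matcher(text.trim());
        if (!m.matches()) {
            throw new IllegalArgumentException("Not a valid size: " + text);
        }
        long value = Long.parseLong(m.group(1));
        switch (m.group(2).toUpperCase(Locale.ROOT)) {
            case "KB": return value * 1024L;
            case "MB": return value * 1024L * 1024L;
            case "GB": return value * 1024L * 1024L * 1024L;
            default:   return value; // no suffix: bytes
        }
    }
}
```

Under this assumption, 5000KB is 5,120,000 bytes and 5MB is 5,242,880 bytes. Both are close to, but not exactly, 5,000,000 bytes.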

Here are a few fileNamePattern values with an explanation of their effects.

| fileNamePattern | Rollover schedule | Example |
|---|---|---|
| /wombat/foo.%d | Daily rollover (at midnight). Due to the omission of the optional time and date pattern for the %d token specifier, the default pattern of yyyy-MM-dd is assumed, which corresponds to daily rollover. | File property not set: During November 23rd, 2006, logging output will go to the file /wombat/foo.2006-11-23. At midnight and for the rest of the 24th, logging output will be directed to /wombat/foo.2006-11-24. File property set to /wombat/foo.txt: During November 23rd, 2006, logging output will go to the file /wombat/foo.txt. At midnight, foo.txt will be renamed as /wombat/foo.2006-11-23. A new /wombat/foo.txt file will be created and for the rest of November 24th logging output will be directed to foo.txt. |
| /wombat/%d{yyyy/MM}/foo.txt | Rollover at the beginning of each month. | File property not set: During the month of October 2006, logging output will go to /wombat/2006/10/foo.txt. After midnight of October 31st and for the rest of November, logging output will be directed to /wombat/2006/11/foo.txt. File property set to /wombat/foo.txt: The active log file will always be /wombat/foo.txt. During the month of October 2006, logging output will go to /wombat/foo.txt. At midnight of October 31st, /wombat/foo.txt will be renamed as /wombat/2006/10/foo.txt. A new /wombat/foo.txt file will be created where logging output will go for the rest of November. At midnight of November 30th, /wombat/foo.txt will be renamed as /wombat/2006/11/foo.txt and so on. |
| /wombat/foo.%d{yyyy-ww}.log | Rollover at the first day of each week. Note that the first day of the week depends on the locale. | Similar to previous cases, except that rollover will occur at the beginning of every new week. |
| /wombat/foo%d{yyyy-MM-dd_HH}.log | Rollover at the top of each hour. | Similar to previous cases, except that rollover will occur at the top of every hour. |
| /wombat/foo%d{yyyy-MM-dd_HH-mm}.log | Rollover at the beginning of every minute. | Similar to previous cases, except that rollover will occur at the beginning of every minute. |
| /wombat/foo%d{yyyy-MM-dd_HH-mm, UTC}.log | Rollover at the beginning of every minute. | Similar to previous cases, except that file names will be expressed in UTC. |
| /foo/%d{yyyy-MM,aux}/%d.log | Rollover daily. Archives located under a folder containing year and month. | In this example, the first %d token is marked as auxiliary. The second %d token, with time and date pattern omitted, is then assumed to be primary. Thus, rollover will occur daily (default for %d) and the folder name will depend on the year and month. For example, during the month of November 2006, archived files will all be placed under the /foo/2006-11/ folder, e.g. /foo/2006-11/2006-11-14.log. |

Any forward or backward slash characters are interpreted as folder (directory) separators. Any required folder will be created as necessary. You can thus easily place your log files in separate folders.

Automatic file compression

TimeBasedRollingPolicy supports automatic file compression. This feature is enabled if the value of the fileNamePattern option ends with .gz, .zip or .xz. Note that xz compression requires the Tukaani project's XZ library for Java. In case XZ compression is requested but the XZ library is missing, logback will substitute GZ compression as a fallback.

| fileNamePattern | Rollover schedule | Example |
|---|---|---|
| /wombat/foo.%d.gz | Daily rollover (at midnight) with automatic GZIP compression of the archived files. | File property not set: During November 23rd, 2009, logging output will go to the file /wombat/foo.2009-11-23. However, at midnight that file will be compressed to become /wombat/foo.2009-11-23.gz. For the 24th of November, logging output will be directed to /wombat/foo.2009-11-24 until it is rolled over at the beginning of the next day. File property set to /wombat/foo.txt: During November 23rd, 2009, logging output will go to the file /wombat/foo.txt. At midnight that file will be compressed and renamed as /wombat/foo.2009-11-23.gz. A new /wombat/foo.txt file will be created where logging output will go for the rest of November 24th. At midnight on November 24th, /wombat/foo.txt will be compressed and renamed as /wombat/foo.2009-11-24.gz and so on. |
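Compressing a finished archive amounts to streaming it through a compressor and deleting the original. The sketch below uses the JDK's GZIPOutputStream. GzipArchiver is a hypothetical name, and this is not logback's compression code. It reproduces the /wombat/foo.2009-11-23 to /wombat/foo.2009-11-23.gz step from the table above.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.zip.GZIPOutputStream;

// Sketch of compressing a rolled-over file when the pattern ends in .gz.
// Plain JDK code, not logback's compressor.
class GzipArchiver {
    // Compresses `source` into `source + ".gz"` and removes the uncompressed copy.
    static Path compressAndDelete(Path source) {
        Path target = source.resolveSibling(source.getFileName() + ".gz");
        try (InputStream in = Files.newInputStream(source);
             OutputStream out = new GZIPOutputStream(Files.newOutputStream(target))) {
            byte[] buf = new byte[8192];
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // Delete only after both streams are closed (required on some platforms).
        try {
            Files.delete(source);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return target;
    }
}
```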

The fileNamePattern serves a dual purpose. First, by studying the pattern, logback computes the requested rollover periodicity. Second, it computes each archived file's name. Note that it is possible for two different patterns to specify the same periodicity. The patterns yyyy-MM and yyyy@MM both specify monthly rollover, although the resulting archive files will carry different names.
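One plausible way to infer the rollover period from a date pattern works in steps. Format a fixed reference instant, add one minute, one hour, one day and so on, and report the smallest step that changes the formatted string. The sketch below illustrates this. PeriodicityGuess is hypothetical, and UTC with Locale.US is assumed so that the result does not depend on the host. It shows why yyyy-MM and yyyy@MM both come out as monthly.

```java
import java.text.SimpleDateFormat;
import java.util.Calendar;
import java.util.Locale;
import java.util.TimeZone;

// Hypothetical sketch: infer a rollover period from a %d date pattern by
// finding the smallest time step that changes the formatted string.
class PeriodicityGuess {
    enum Period { MINUTE, HOUR, DAY, WEEK, MONTH, YEAR, NONE }

    private static final int[] FIELDS = {
        Calendar.MINUTE, Calendar.HOUR_OF_DAY, Calendar.DAY_OF_MONTH,
        Calendar.WEEK_OF_YEAR, Calendar.MONTH, Calendar.YEAR
    };
    private static final Period[] PERIODS = {
        Period.MINUTE, Period.HOUR, Period.DAY, Period.WEEK, Period.MONTH, Period.YEAR
    };

    static Period infer(String datePattern) {
        TimeZone utc = TimeZone.getTimeZone("UTC");
        SimpleDateFormat fmt = new SimpleDateFormat(datePattern, Locale.US);
        fmt.setTimeZone(utc);
        // Fixed reference instant: 1970-01-01T00:00:00Z.
        Calendar ref = Calendar.getInstance(utc, Locale.US);
        ref.setTimeInMillis(0L);
        String refText = fmt.format(ref.getTime());
        // Try ever larger steps; the first one that changes the text is the period.
        for (int i = 0; i < FIELDS.length; i++) {
            Calendar next = (Calendar) ref.clone();
            next.add(FIELDS[i], 1);
            if (!fmt.format(next.getTime()).equals(refText)) {
                return PERIODS[i];
            }
        }
        return Period.NONE;
    }
}
```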

By setting the file property you can decouple the location of the active log file and the location of the archived log files. The logging output will be targeted into the file specified by the file property. It follows that the name of the active log file will not change over time. However, if you choose to omit the file property, then the active file will be computed anew for each period based on the value of fileNamePattern. By leaving the file option unset you can avoid file renaming errors which occur while there exist external file handles referencing log files during roll over.

The maxHistory property controls the maximum number of archive files to keep, deleting older files. For example, if you specify monthly rollover and set maxHistory to 6, then 6 months' worth of archive files will be kept, with files older than 6 months deleted. Note that as old archived log files are removed, any folders which were created for the purpose of log file archiving will be removed as appropriate.
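Assuming monthly rollover, the two retention rules can be sketched as a pure function over archive periods and sizes. maxHistory is applied first and keeps only the most recent periods. totalSizeCap then removes the oldest remaining archives until the total fits. ArchivePruner is hypothetical: it works on an in-memory map rather than on files and is not logback's cleanup code. As in the property descriptions, a value of zero disables the corresponding rule.

```java
import java.time.YearMonth;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of archive pruning for monthly rollover: first apply
// maxHistory, then totalSizeCap (drop oldest until under the cap).
class ArchivePruner {
    // archives: period -> archive size in bytes. Returns the periods to keep, oldest first.
    static List<YearMonth> keep(Map<YearMonth, Long> archives, YearMonth current,
                                int maxHistory, long totalSizeCap) {
        TreeMap<YearMonth, Long> kept = new TreeMap<>(archives);
        if (maxHistory > 0) {
            // Drop archives older than `maxHistory` periods before the current one.
            YearMonth oldestAllowed = current.minusMonths(maxHistory);
            kept.headMap(oldestAllowed, false).clear();
        }
        if (totalSizeCap > 0) {
            long total = 0;
            for (long size : kept.values()) {
                total += size;
            }
            // Then remove the oldest remaining archives until the cap is respected.
            while (total > totalSizeCap && !kept.isEmpty()) {
                total -= kept.pollFirstEntry().getValue();
            }
        }
        return new ArrayList<>(kept.keySet());
    }
}
```

With eleven 100-byte archives for January to November 2006 and a current month of December 2006, maxHistory set to 6 keeps June to November. Adding a 350-byte cap then trims that down to September to November.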

For various technical reasons, rollovers are not clock-driven but depend on the arrival of logging events. For example, on 8th of March 2002, assuming the fileNamePattern is set to yyyy-MM-dd (daily rollover), the arrival of the first event after midnight will trigger a rollover. If there are no logging events during, say 23 minutes and 47 seconds after midnight, then rollover will actually occur at 00:23'47 AM on March 9th and not at 0:00 AM. Thus, depending on the arrival rate of events, rollovers might be triggered with some latency. However, regardless of the delay, the rollover algorithm is known to be correct, in the sense that all logging events generated during a certain period will be output in the correct file delimiting that period.
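Because rollovers depend on the arrival of events, a daily trigger only needs to compare each event's timestamp with the next midnight. DailyTrigger below is a hypothetical, simplified sketch that uses local time with no time zone handling. It is not logback's TimeBasedRollingPolicy.

```java
import java.time.LocalDate;
import java.time.LocalDateTime;

// Hypothetical sketch of an event-driven daily trigger: nothing happens at
// midnight itself; the first event at or after midnight causes the rollover.
class DailyTrigger {
    private LocalDateTime nextCheck;

    DailyTrigger(LocalDateTime start) {
        this.nextCheck = nextMidnightAfter(start);
    }

    // Called once per logging event; true means "roll over before writing this event".
    boolean isTriggeringEvent(LocalDateTime eventTime) {
        if (eventTime.isBefore(nextCheck)) {
            return false;
        }
        nextCheck = nextMidnightAfter(eventTime);
        return true;
    }

    private static LocalDateTime nextMidnightAfter(LocalDateTime t) {
        LocalDate day = t.toLocalDate();
        return day.plusDays(1).atStartOfDay();
    }
}
```

Starting on March 8th, 2002, an event at 23:59:59 returns false. The first event of March 9th, at 00:23:47, returns true, which is the delayed rollover described above. Later events that day return false again.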

Here is a sample configuration for RollingFileAppender in conjunction with a TimeBasedRollingPolicy.

Example: Sample configuration of a RollingFileAppender using a TimeBasedRollingPolicy (logback-examples/src/main/resources/chapters/appenders/conf/logback-RollingTimeBased.xml)

<configuration>
  <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <file>logFile.log</file>
    <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
      <!-- daily rollover -->
      <fileNamePattern>logFile.%d{yyyy-MM-dd}.log</fileNamePattern>

      <!-- keep 30 days' worth of history capped at 3GB total size -->
      <maxHistory>30</maxHistory>
      <totalSizeCap>3GB</totalSizeCap>
    </rollingPolicy>

    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} -%kvp- %msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>

Requires a server call.

The next configuration sample illustrates the use of RollingFileAppender associated with TimeBasedRollingPolicy in prudent mode.

Example: Sample configuration of a RollingFileAppender using a TimeBasedRollingPolicy (logback-examples/src/main/resources/chapters/appenders/conf/logback-PrudentTimeBasedRolling.xml)

```xml
<configuration>
  <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <!-- Support multiple-JVM writing to the same log file -->
    <prudent>true</prudent>
    <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
      <fileNamePattern>logFile.%d{yyyy-MM-dd}.log</fileNamePattern>
      <maxHistory>30</maxHistory>
      <totalSizeCap>3GB</totalSizeCap>
    </rollingPolicy>

    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} -%kvp -%msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>
```

Size and time based rolling policy

Sometimes you may wish to archive files essentially by date but at the same time limit the size of each log file, in particular if post-processing tools impose size limits on the log files. In order to address this requirement, logback ships with SizeAndTimeBasedRollingPolicy.

Note that TimeBasedRollingPolicy already allows limiting the combined size of archived log files. If you only wish to limit the combined size of log archives, then using TimeBasedRollingPolicy described above and setting the totalSizeCap property should be amply sufficient. Moreover, given that file renaming is a relatively slow process and is fraught with problems, we discourage the use of SizeAndTimeBasedRollingPolicy unless you have a real-world use case.

Rollover based on size relies on the "%i" conversion token in addition to "%d". Both the %i and %d tokens are mandatory. Each time the current log file reaches maxFileSize before the current time period ends, it will be archived with an increasing index, starting at 0.

The table below lists the properties applicable for SizeAndTimeBasedRollingPolicy. Note that these properties complement those applicable for TimeBasedRollingPolicy.

| Property Name | Type | Description |
|---|---|---|
| maxFileSize | FileSize | Each time the current log file reaches maxFileSize before the current time period ends, it will be archived with an increasing index, starting at 0. Options defined in units of "FileSize" can be specified in bytes, kilobytes, megabytes or gigabytes by suffixing a numeric value with KB, MB and GB respectively. For example, 5000000, 5000KB, 5MB and 2GB are all valid values, with the first three being approximately equivalent. |
| checkIncrement | Duration | Since 1.5.8, the checkIncrement property is no longer needed, as logback now counts the number of bytes written to the file. |
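The size suffixes can be illustrated with a small parser. This is a hypothetical sketch, not logback's FileSize class. It assumes binary multiples (1 KB = 1024 bytes), which is why 5000000, 5000KB and 5MB come out close to, but not exactly equal to, the same number of bytes.

```java
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical parser for size strings such as "5000000", "5000KB", "5MB", "2GB".
// Assumption: binary multiples, i.e. 1 KB = 1024 bytes.
final class SizeParser {
    private static final Pattern SIZE =
            Pattern.compile("(\\d+)\\s*(KB|MB|GB)?", Pattern.CASE_INSENSITIVE);

    static long parse(String text) {
        Matcher m = SIZE.matcher(text.trim());
        if (!m.matches()) {
            throw new IllegalArgumentException("Unparsable size: " + text);
        }
        long value = Long.parseLong(m.group(1));
        String unit = m.group(2) == null ? "" : m.group(2).toUpperCase(Locale.ROOT);
        switch (unit) {
            case "KB": return value * 1024L;
            case "MB": return value * 1024L * 1024L;
            case "GB": return value * 1024L * 1024L * 1024L;
            default:   return value; // no suffix: plain number of bytes
        }
    }
}
```

Under this assumption, 5000KB is 5,120,000 bytes and 5MB is 5,242,880 bytes.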

Here is a sample configuration file demonstrating time and size based log file archiving.

Example: Sample configuration for SizeAndTimeBasedRollingPolicy (logback-examples/src/main/resources/chapters/appenders/conf/logback-sizeAndTime.xml)

```xml
<configuration>
  <appender name="ROLLING" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <file>mylog.txt</file>
    <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
      <!-- rollover daily -->
      <fileNamePattern>mylog-%d{yyyy-MM-dd}.%i.txt</fileNamePattern>
      <!-- each file should be at most 100MB, keep 60 days worth of history, but at most 20GB -->
      <maxFileSize>100MB</maxFileSize>
      <maxHistory>60</maxHistory>
      <totalSizeCap>20GB</totalSizeCap>
    </rollingPolicy>
    <encoder>
      <pattern>%msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="ROLLING" />
  </root>
</configuration>
```


Size and time based archiving supports deletion of old archive files. You need to specify the number of periods to preserve with the maxHistory property. When your application is stopped and restarted, logging will continue at the correct location, i.e. at the largest index number for the current period.
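Here is a minimal sketch of how the %i index could be recovered after a restart, assuming the mylog-%d{yyyy-MM-dd}.%i.txt pattern from the example above. SizeAndTimeIndex is a hypothetical helper. It scans existing file names for the current day and returns the largest index found, or 0 when the period has no archives yet.

```java
import java.time.LocalDate;
import java.util.Collection;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper mirroring the mylog-%d{yyyy-MM-dd}.%i.txt pattern.
class SizeAndTimeIndex {
    private static final Pattern NAME =
            Pattern.compile("mylog-(\\d{4}-\\d{2}-\\d{2})\\.(\\d+)\\.txt");

    static String fileName(LocalDate day, int index) {
        return "mylog-" + day + "." + index + ".txt";
    }

    /** Largest index already used for the given day, or 0 if none exists yet. */
    static int largestIndex(Collection<String> existing, LocalDate day) {
        int max = 0; // indexes start at 0
        for (String name : existing) {
            Matcher m = NAME.matcher(name);
            if (m.matches() && LocalDate.parse(m.group(1)).equals(day)) {
                max = Math.max(max, Integer.parseInt(m.group(2)));
            }
        }
        return max;
    }
}
```

Files from other days, such as mylog-2024-03-07.5.txt, do not affect the index for March 8th.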

In versions prior to 1.1.7, this document mentioned a component called SizeAndTimeBasedFNATP. However, given that SizeAndTimeBasedRollingPolicy offers a simpler configuration structure, we no longer document SizeAndTimeBasedFNATP. Moreover, in logback version 1.5.8, SizeAndTimeBasedFNATP was renamed to SizeAndTimeBasedFileNamingAndTriggeringPolicy. Thus, earlier configuration files using SizeAndTimeBasedFNATP will no longer work.

FixedWindowRollingPolicy

Given that file renaming is a relatively slow process and is fraught with problems, we consider FixedWindowRollingPolicy a deprecated policy and do not recommend its use.

When rolling over, FixedWindowRollingPolicy renames files according to a fixed window algorithm as described below.

The fileNamePattern option represents the file name pattern for the archived (rolled over) log files. This option is required and must include an integer token %i somewhere within the pattern.

Here are the available properties for FixedWindowRollingPolicy:

| Property Name | Type | Description |
|---|---|---|
| minIndex | int | This option represents the lower bound for the window's index. |
| maxIndex | int | This option represents the upper bound for the window's index. |
| fileNamePattern | String | This option represents the pattern that will be followed by the FixedWindowRollingPolicy when renaming the log files. It must contain the integer token %i, which marks where the current window index is inserted. For example, using MyLogFile%i.log associated with minimum and maximum values of 1 and 3 will produce archive files named MyLogFile1.log, MyLogFile2.log and MyLogFile3.log. Note that file compression is also specified via this property. For example, fileNamePattern set to MyLogFile%i.log.zip means that archived files must be compressed using the zip format; gz format is also supported. |

Given that the fixed window rolling policy requires as many file renaming operations as the window size, large window sizes are strongly discouraged. When large values are specified by the user, the current implementation will automatically reduce the window size to 20.

Let us go over a more concrete example of the fixed window rollover policy. Suppose that minIndex is set to 1, maxIndex set to 3, fileNamePattern property set to foo%i.log, and that file property is set to foo.log.

| Number of rollovers | Active output target | Archived log files | Description |
|---|---|---|---|
| 0 | foo.log | - | No rollover has happened yet, logback logs into the initial file. |
| 1 | foo.log | foo1.log | First rollover. foo.log is renamed as foo1.log. A new foo.log file is created and becomes the active output target. |
| 2 | foo.log | foo1.log, foo2.log | Second rollover. foo1.log is renamed as foo2.log. foo.log is renamed as foo1.log. A new foo.log file is created and becomes the active output target. |
| 3 | foo.log | foo1.log, foo2.log, foo3.log | Third rollover. foo2.log is renamed as foo3.log. foo1.log is renamed as foo2.log. foo.log is renamed as foo1.log. A new foo.log file is created and becomes the active output target. |
| 4 | foo.log | foo1.log, foo2.log, foo3.log | In this and subsequent rounds, the rollover begins by deleting foo3.log. Other files are renamed by incrementing their index as shown in previous steps. In this and subsequent rollovers, there will be three archive logs and one active log file. |
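The renaming sequence in the table can be simulated in memory. FixedWindowSimulation is a hypothetical class that models files as map entries (file name to a content label) and performs no real I/O. It follows the table's order of operations: delete the file at the highest index, shift the remaining archives up by one, then rename the active file to minIndex.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical in-memory simulation of the fixed window algorithm.
// Keys are file names; values label which "generation" of output a file holds.
class FixedWindowSimulation {
    private final String activeName;
    private final String archivePattern; // must contain %i
    private final int minIndex;
    private final int maxIndex;
    final Map<String, String> files = new LinkedHashMap<>();
    private int generation = 0;

    FixedWindowSimulation(String activeName, String archivePattern, int minIndex, int maxIndex) {
        this.activeName = activeName;
        this.archivePattern = archivePattern;
        this.minIndex = minIndex;
        this.maxIndex = maxIndex;
        files.put(activeName, "gen" + generation);
    }

    private String archive(int i) {
        return archivePattern.replace("%i", Integer.toString(i));
    }

    void rollover() {
        // 1. Delete the oldest archive, if the window is already full.
        files.remove(archive(maxIndex));
        // 2. Shift the remaining archives up by one index, highest first.
        for (int i = maxIndex - 1; i >= minIndex; i--) {
            String content = files.remove(archive(i));
            if (content != null) {
                files.put(archive(i + 1), content);
            }
        }
        // 3. Rename the active file to the lowest index and start a fresh one.
        files.put(archive(minIndex), files.remove(activeName));
        generation++;
        files.put(activeName, "gen" + generation);
    }
}
```

After four rollovers with minIndex 1, maxIndex 3 and the pattern foo%i.log, foo.log holds the newest output, foo1.log through foo3.log hold the three previous generations, and the oldest generation is gone, matching the last row of the table. Each rollover moves up to maxIndex - minIndex + 1 files, which is why large windows are discouraged.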

The configuration file below gives an example of configuring RollingFileAppender and FixedWindowRollingPolicy. Note that the file property is mandatory even if it contains some of the same information as conveyed with the fileNamePattern option.

Example: Sample configuration of a RollingFileAppender using a FixedWindowRollingPolicy (logback-examples/src/main/resources/chapters/appenders/conf/logback-RollingFixedWindow.xml)

```xml
<configuration>
  <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <file>test.log</file>

    <rollingPolicy class="ch.qos.logback.core.rolling.FixedWindowRollingPolicy">
      <fileNamePattern>tests.%i.log.zip</fileNamePattern>
      <minIndex>1</minIndex>
      <maxIndex>3</maxIndex>
    </rollingPolicy>

    <triggeringPolicy class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy">
      <maxFileSize>5MB</maxFileSize>
    </triggeringPolicy>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} -%kvp -%msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>
```

Overview of triggering policies

TriggeringPolicy implementations are responsible for instructing the RollingFileAppender when to roll over.

The TriggeringPolicy interface contains only one method.

```java
package ch.qos.logback.core.rolling;

import java.io.File;

import ch.qos.logback.core.spi.LifeCycle;

public interface TriggeringPolicy<E> extends LifeCycle {

  public boolean isTriggeringEvent(final File activeFile, final E event);
}
```

The isTriggeringEvent() method takes as parameters the active file and the logging event currently being processed. The concrete implementation determines whether the rollover should occur or not, based on these parameters.

The most widely used triggering policy, namely TimeBasedRollingPolicy, which also doubles as a rolling policy, was already discussed earlier along with other rolling policies.

SizeBasedTriggeringPolicy

SizeBasedTriggeringPolicy looks at the size of the currently active file. If it grows larger than the specified size, it will signal the owning RollingFileAppender to trigger the rollover of the existing active file.

SizeBasedTriggeringPolicy accepts only one parameter, namely maxFileSize, with a default value of 10 MB.
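A size-based policy of this kind can be sketched against a simplified copy of the TriggeringPolicy interface. SimpleTriggeringPolicy and MaxSizeTriggeringPolicy are hypothetical names. The real interface also extends LifeCycle, and the 10 MB default below assumes 1 MB = 1024 * 1024 bytes.

```java
import java.io.File;

// Hypothetical, simplified stand-in for TriggeringPolicy<E>
// (the real interface also extends LifeCycle).
interface SimpleTriggeringPolicy<E> {
    boolean isTriggeringEvent(File activeFile, E event);
}

// Hypothetical size-based policy: trigger once the active file reaches maxFileSize bytes.
class MaxSizeTriggeringPolicy<E> implements SimpleTriggeringPolicy<E> {
    // "10 MB", assuming 1 MB = 1024 * 1024 bytes
    static final long DEFAULT_MAX_FILE_SIZE = 10L * 1024 * 1024;

    private final long maxFileSize;

    MaxSizeTriggeringPolicy(long maxFileSize) {
        this.maxFileSize = maxFileSize;
    }

    MaxSizeTriggeringPolicy() {
        this(DEFAULT_MAX_FILE_SIZE);
    }

    @Override
    public boolean isTriggeringEvent(File activeFile, E event) {
        // File.length() returns 0 when the file does not exist yet.
        // The event itself is irrelevant to a purely size-based decision.
        return activeFile.length() >= maxFileSize;
    }
}
```

The event parameter is unused here, but it lets other policies base their decision on the event being processed.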

The maxFileSize option can be specified in bytes, kilobytes, megabytes or gigabytes by suffixing a numeric value with KB, MB and GB respectively. For example, 5000000, 5000KB, 5MB and 2GB are all valid values, with the first three being approximately equivalent.

Here is a sample configuration with a RollingFileAppender in conjunction with SizeBasedTriggeringPolicy triggering rollover when the log file reaches 5MB in size.

Example: Sample configuration of a RollingFileAppender using a SizeBasedTriggeringPolicy (logback-examples/src/main/resources/chapters/appenders/conf/logback-RollingSizeBased.xml)

```xml
<configuration>
  <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
    <file>test.log</file>
    <rollingPolicy class="ch.qos.logback.core.rolling.FixedWindowRollingPolicy">
      <fileNamePattern>test.%i.log.zip</fileNamePattern>
      <minIndex>1</minIndex>
      <maxIndex>3</maxIndex>
    </rollingPolicy>

    <triggeringPolicy class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy">
      <maxFileSize>5MB</maxFileSize>
    </triggeringPolicy>
    <encoder>
      <pattern>%-4relative [%thread] %-5level %logger{35} -%kvp -%msg%n</pattern>
    </encoder>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="FILE" />
  </root>
</configuration>
```

Logback Classic

While logging events are generic in logback-core, within logback-classic they are always instances of ILoggingEvent. Logback-classic is nothing more than a specialized processing pipeline handling instances of ILoggingEvent.

SocketAppender and SSLSocketAppender

The appenders covered thus far are only able to log to local resources. In contrast, the SocketAppender is designed to log to a remote entity by transmitting serialized ILoggingEvent instances over the wire. When using SocketAppender, logging events on the wire are sent in the clear. However, when using SSLSocketAppender, logging events are delivered over a secure channel.

The actual type of the serialized event is LoggingEventVO, which implements the ILoggingEvent interface. Nevertheless, remote logging is non-intrusive as far as the logging event is concerned. On the receiving end, after deserialization, the event can be logged as if it were generated locally. Multiple SocketAppender instances running on different machines can direct their logging output to a central log server whose format is fixed. SocketAppender does not take an associated layout because it sends serialized events to a remote server. SocketAppender operates above the Transmission Control Protocol (TCP) layer, which provides a reliable, sequenced, flow-controlled end-to-end octet stream. Consequently, if the remote server is reachable, then log events will eventually arrive there. Otherwise, if the remote server is down or unreachable, the logging events will simply be dropped. If and when the server comes back up, then event transmission will be resumed transparently. This transparent reconnection is performed by a connector thread which periodically attempts to connect to the server.
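The connector thread's job can be pictured as a retry loop. In this hypothetical sketch, connectWithRetry receives the connection attempt as a Callable (in practice, something like opening a java.net.Socket to remoteHost and port) and sleeps for the reconnection delay between failures. The maxAttempts bound exists only to keep the example finite; the text above describes retries that continue until the server is back.

```java
import java.util.concurrent.Callable;

// Hypothetical sketch of a connector loop (not logback code).
final class Connector {
    private Connector() {}

    static <T> T connectWithRetry(Callable<T> attempt, long reconnectionDelayMillis, int maxAttempts)
            throws InterruptedException {
        for (int i = 1; i <= maxAttempts; i++) {
            try {
                return attempt.call(); // e.g. open a socket to remoteHost:port
            } catch (Exception e) {
                if (i == maxAttempts) {
                    break; // give up; events produced meanwhile would be dropped
                }
                Thread.sleep(reconnectionDelayMillis); // wait before trying again
            }
        }
        return null;
    }
}
```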

Logging events are automatically buffered by the native TCP implementation. This means that if the link to the server is slow but still faster than the rate of event production by the client, the client will not be affected by the slow network connection. However, if the network connection is slower than the rate of event production, then the client can only progress at the network rate. In particular, in the extreme case where the network link to the server is down, the client will be eventually blocked. Alternatively, if the network link is up, but the server is down, the client will not be blocked, although the log events will be lost due to server unavailability.

Even if a SocketAppender is no longer attached to any logger, it will not be garbage collected in the presence of a connector thread. A connector thread exists only if the connection to the server is down. To avoid this garbage collection problem, you should close the SocketAppender explicitly. Long-lived applications which create/destroy many SocketAppender instances should be aware of this garbage collection problem. Most other applications can safely ignore it. If the JVM hosting the SocketAppender exits before the SocketAppender is closed, either explicitly or subsequent to garbage collection, then there might be untransmitted data in the pipe which may be lost. This is a common problem on Windows-based systems. To avoid lost data, it is usually sufficient to close() the SocketAppender either explicitly or by calling the LoggerContext's stop() method before exiting the application.

The remote server is identified by the remoteHost and port properties.

SocketAppender properties are listed in the following table. SSLSocketAppender supports many additional configuration properties, which are detailed in the section entitled Using SSL.

| Property Name | Type | Description |
|---|---|---|
| includeCallerData | boolean | The includeCallerData option takes a boolean value. If true, the caller data will be available to the remote host. By default no caller data is sent to the server. |
| port | int | The port number of the remote server. |
| reconnectionDelay | Duration | The reconnectionDelay option takes a duration string, such as "10 seconds", representing the time to wait between each failed connection attempt to the server. The default value of this option is 30 seconds. Setting this option to zero turns off reconnection capability. Note that after a successful connection to the server, no connector thread is present. |
| queueSize | int | The queueSize property takes an integer (greater than zero) representing the number of logging events to retain for delivery to the remote receiver. When the queue size is one, event delivery to the remote receiver is synchronous. When the queue size is greater than one, new events are enqueued, assuming that there is space available in the queue. Using a queue length greater than one can improve performance by absorbing transient network delays. See also the eventDelayLimit property. |
| eventDelayLimit | Duration | The eventDelayLimit option takes a duration string, such as "10 seconds". It represents the time to wait before dropping events in case the local queue is full, i.e. already contains queueSize events. This may occur if the remote host is persistently slow accepting events. The default value of this option is 100 milliseconds. |
| remoteHost | String | The host name of the server. |
| ssl | SSLConfiguration | Supported only for SSLSocketAppender, this property provides the SSL configuration that will be used by the appender, as described in Using SSL. |
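The interplay between queueSize and eventDelayLimit can be sketched with a bounded blocking queue. The logging thread waits at most eventDelayLimit for free space and then drops the event. BoundedEventQueue is hypothetical. It leaves out the sender thread's socket handling and the synchronous case where queueSize is one.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of queueSize / eventDelayLimit semantics (not logback code).
class BoundedEventQueue<E> {
    private final BlockingQueue<E> queue;
    private final long eventDelayLimitMillis;
    private final AtomicInteger dropped = new AtomicInteger();

    BoundedEventQueue(int queueSize, long eventDelayLimitMillis) {
        this.queue = new ArrayBlockingQueue<>(queueSize); // queueSize must be > 0
        this.eventDelayLimitMillis = eventDelayLimitMillis;
    }

    /** Called on the logging thread; returns false if the event had to be dropped. */
    boolean append(E event) {
        try {
            if (queue.offer(event, eventDelayLimitMillis, TimeUnit.MILLISECONDS)) {
                return true;
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt visible to callers
        }
        dropped.incrementAndGet(); // queue stayed full for eventDelayLimit
        return false;
    }

    /** Would be called by a sender thread (not shown) that writes events to the socket. */
    E nextForDelivery() throws InterruptedException {
        return queue.take();
    }

    int droppedCount() {
        return dropped.get();
    }
}
```

With a queue size of 2 and no sender draining it, the third event waits for the delay limit and is then dropped.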

Logging Server Options

The standard Logback Classic distribution includes two options for servers that can be used to receive logging events from SocketAppender or SSLSocketAppender.

- ServerSocketReceiver and its SSL-enabled counterpart SSLServerSocketReceiver are receiver components which can be configured in the logback.xml configuration file of an application in order to receive events from a remote socket appender. See Receivers for configuration details and usage examples.
- SimpleSocketServer and its SSL-enabled counterpart SimpleSSLSocketServer both offer an easy-to-use standalone Java application that is designed to be configured and run from your shell's command line interface. These applications simply wait for logging events from SocketAppender or SSLSocketAppender clients. Each received event is logged according to local server policy. Usage examples are given below.

Using SimpleSocketServer

The SimpleSocketServer application takes two command-line arguments, port and configFile, where port is the port to listen on and configFile is a configuration script in XML format.

Assuming you are in the logback-examples/ directory, start SimpleSocketServer with the following command:

```shell
java ch.qos.logback.classic.net.SimpleSocketServer 6000 \
  src/main/java/chapters/appenders/socket/server1.xml
```

where 6000 is the port number to listen on and server1.xml is a configuration script that adds a ConsoleAppender and a RollingFileAppender to the root logger. After you have started SimpleSocketServer, you can send it log events from multiple clients using SocketAppender.

The examples associated with this manual include two such clients: chapters.appenders.socket.SocketClient1 and chapters.appenders.socket.SocketClient2. Both clients wait for the user to type a line of text on the console. The text is encapsulated in a logging event of level debug and then sent to the remote server. The two clients differ in the configuration of the SocketAppender. SocketClient1 configures the appender programmatically while SocketClient2 requires a configuration file.

Assuming SimpleSocketServer is running on the local host, you connect to it with the following command:

```shell
java chapters.appenders.socket.SocketClient1 localhost 6000
```

Each line that you type should appear on the console of the SimpleSocketServer launched in the previous step. If you stop and restart the SimpleSocketServer, the client will transparently reconnect to the new server instance, although the events generated while disconnected will be simply (and irrevocably) lost.

Unlike SocketClient1, the sample application SocketClient2 does not configure logback by itself. It requires a configuration file in XML format. The configuration file client1.xml shown below creates a SocketAppender and attaches it to the root logger.

Example: SocketAppender configuration (logback-examples/src/main/resources/chapters/appenders/socket/client1.xml)

```xml
<configuration>
  <appender name="SOCKET" class="ch.qos.logback.classic.net.SocketAppender">
    <remoteHost>${host}</remoteHost>
    <port>${port}</port>
    <reconnectionDelay>10000</reconnectionDelay>
    <includeCallerData>${includeCallerData}</includeCallerData>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="SOCKET" />
  </root>
</configuration>
```

Note that in the above configuration script the values for the remoteHost, port and includeCallerData properties are not given directly but as substituted variable keys. The values for the variables can be specified as system properties:

```shell
java -Dhost=localhost -Dport=6000 -DincludeCallerData=false \
  chapters.appenders.socket.SocketClient2 src/main/java/chapters/appenders/socket/client1.xml
```

This command should give similar results to the previous SocketClient1 example.
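The ${host}-style placeholders behave like text substitution from a set of known values, such as system properties. The following is a deliberately simplified, hypothetical sketch. The VariableSubstitution class handles only plain ${name} references; logback's own substitution also supports default values and several variable scopes, none of which are modelled here.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical, simplified ${name} substitution (no defaults, no nesting, no scopes).
final class VariableSubstitution {
    private static final Pattern VARIABLE = Pattern.compile("\\$\\{([^}]+)\\}");

    private VariableSubstitution() {}

    static String substitute(String text, Map<String, String> values) {
        Matcher m = VARIABLE.matcher(text);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = values.get(m.group(1));
            // Unknown variables are left as-is so the problem remains visible.
            String replacement = (value != null) ? value : m.group();
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

In the SocketClient2 scenario, the map would hold the values passed with -Dhost, -Dport and -DincludeCallerData, for example collected with System.getProperty.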

Allow us to repeat for emphasis that serialization of logging events is not intrusive. A deserialized event carries the same information as any other logging event. It can be manipulated as if it were generated locally, except that serialized logging events by default do not include caller data. Here is an example to illustrate the point. First, start SimpleSocketServer with the following command:

```shell
java ch.qos.logback.classic.net.SimpleSocketServer 6000 \
  src/main/java/chapters/appenders/socket/server2.xml
```

The configuration file server2.xml creates a ConsoleAppender whose layout outputs the caller's file name and line number along with other information. If you run SocketClient2 with the configuration file client1.xml as previously, you will notice that the output on the server side will contain two question marks between square brackets instead of the file name and the line number of the caller:

```text
2006-11-06 17:37:30,968 DEBUG [Thread-0] [?:?] chapters.appenders.socket.SocketClient2 - Hi
```

The outcome can be easily changed by instructing the SocketAppender to include caller data by setting the includeCallerData option to true. Using the following command will do the trick:

```shell
java -Dhost=localhost -Dport=6000 -DincludeCallerData=true \
  chapters.appenders.socket.SocketClient2 src/main/java/chapters/appenders/socket/client1.xml
```

As deserialized events can be handled in the same way as locally generated events, they can even be sent to a second server for further treatment. As an exercise, you may wish to set up two servers where the first server tunnels the events it receives from its clients to a second server.

Using SimpleSSLSocketServer

The SimpleSSLSocketServer requires the same port and configFile command-line arguments used by SimpleSocketServer. Additionally, you must provide the location and password for your logging server's X.509 credential using system properties specified on the command line.

Assuming you are in the logback-examples/ directory, start SimpleSSLSocketServer with the following command:

```shell
java -Djavax.net.ssl.keyStore=src/main/java/chapters/appenders/socket/ssl/keystore.jks \
  -Djavax.net.ssl.keyStorePassword=changeit \
  ch.qos.logback.classic.net.SimpleSSLSocketServer 6000 \
  src/main/java/chapters/appenders/socket/ssl/server.xml
```

This example runs SimpleSSLSocketServer using an X.509 credential that is suitable only for testing and experimentation. Before using SimpleSSLSocketServer in a production setting, you should obtain an appropriate X.509 credential to identify your logging server. See Using SSL for more details.

Because the server configuration has debug="true" specified on the root element, you will see in the server's startup logging the SSL configuration that will be used. This is useful in validating that local security policies are properly implemented.

With SimpleSSLSocketServer running, you can connect to the server using an SSLSocketAppender. The following example shows the appender configuration needed:

Example: SSLSocketAppender configuration (logback-examples/src/main/resources/chapters/appenders/socket/ssl/client.xml)

```xml
<configuration debug="true">
  <appender name="SOCKET" class="ch.qos.logback.classic.net.SSLSocketAppender">
    <remoteHost>${host}</remoteHost>
    <port>${port}</port>
    <reconnectionDelay>10000</reconnectionDelay>
    <ssl>
      <trustStore>
        <location>${truststore}</location>
        <password>${password}</password>
      </trustStore>
    </ssl>
  </appender>

  <root level="DEBUG">
    <appender-ref ref="SOCKET" />
  </root>
</configuration>
```

Note that, just as in the previous example, the values for remoteHost and port are specified using substituted variable keys. Additionally, note the presence of the ssl property and its nested trustStore property, which specifies the location and password of a trust store using substituted variables. This configuration is necessary because our example server is using a self-signed certificate. See Using SSL for more information on SSL configuration properties for SSLSocketAppender.

We can run a client application using this configuration by specifying the substitution variable values on the command line as system properties:

```shell
java -Dhost=localhost -Dport=6000 \
  -Dtruststore=file:src/main/java/chapters/appenders/socket/ssl/truststore.jks \
  -Dpassword=changeit \
  chapters.appenders.socket.SocketClient2 src/main/java/chapters/appenders/socket/ssl/client.xml
```

As in the previous examples, you can type in a message when prompted by the client application, and the message will be delivered to the logging server (now over a secure channel) where it will be displayed on the console.

Note that the truststore property given on the command line specifies a file URL that identifies the location of the trust store. You may also use a classpath URL as described in Using SSL.

As we saw previously at server startup, because the client configuration has debug="true" specified on the root element, the client's startup logging includes the details of the SSL configuration as an aid to auditing local policy conformance.

ServerSocketAppender and SSLServerSocketAppender

The SocketAppender component and its SSL-enabled counterpart, discussed previously, are designed to allow an application to connect to a remote logging server over the network for the purpose of delivering logging events to the server. In some situations, it may be inconvenient or infeasible to have an application initiate a connection to a remote logging server. For these situations, Logback offers ServerSocketAppender.

Instead of initiating a connection to a remote logging server, ServerSocketAppender passively listens on a TCP socket awaiting incoming connections from remote clients. Logging events that are delivered to the appender are distributed to each connected client. Logging events that occur when no client is connected are summarily discarded.
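The fan-out behavior can be modelled without sockets. In this hypothetical sketch, FanOutAppender represents each connected client as a Consumer, delivers every event to all current clients, and counts events that arrive while nobody is connected instead of buffering them.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;

// Hypothetical model of the fan-out behavior: clients are Consumers, not sockets.
class FanOutAppender<E> {
    private final List<Consumer<E>> clients = new CopyOnWriteArrayList<>();
    private final AtomicInteger discarded = new AtomicInteger();

    void clientConnected(Consumer<E> client) {
        clients.add(client);
    }

    void clientDisconnected(Consumer<E> client) {
        clients.remove(client);
    }

    void append(E event) {
        if (clients.isEmpty()) {
            discarded.incrementAndGet(); // no client connected: the event is discarded
            return;
        }
        for (Consumer<E> client : clients) {
            client.accept(event); // every connected client receives every event
        }
    }

    int discardedCount() {
        return discarded.get();
    }
}
```

A client that connects late sees only the events appended after it connected; nothing is replayed.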

In addition to the basic ServerSocketAppender, Logback offers SSLServerSocketAppender, which distributes logging events to each connected client using a secure, encrypted channel. Moreover, the SSL-enabled appender fully supports mutual certificate-based authentication, which can be used to ensure that only authorized clients can connect to the appender to receive logging events.

The approach to encoding logging events for transmission on the wire is identical to that used by SocketAppender; each event is a serialized instance of ILoggingEvent. Only the direction of connection initiation is reversed. While SocketAppender acts as the active peer in establishing the connection to a logging server, ServerSocketAppender is passive; it listens for incoming connections from clients.

The ServerSocketAppender subtypes are intended to be used exclusively with Logback receiver components. See Receivers for additional information on this component type.

The following configuration properties are supported by ServerSocketAppender:

| Property Name | Type | Description |
|---|---|---|
| address | String | The local network interface address on which the appender will listen. If this property is not specified, the appender will listen on all network interfaces. |
| includeCallerData | boolean | If true, the caller data will be available to the remote host. By default no caller data is sent to the client. |
| port | int | The port number on which the appender will listen. |
| ssl | SSLConfiguration | Supported only for SSLServerSocketAppender, this property provides the SSL configuration that will be used by the appender, as described in Using SSL. |

The following example illustrates a configuration that uses ServerSocketAppender:

Example: Basic ServerSocketAppender Configuration (logback-examples/src/main/resources/chapters/appenders/socket/server4.xml)

```xml
<configuration debug="true">
  <appender name="SERVER"
            class="ch.qos.logback.classic.net.server.ServerSocketAppender">
    <port>${port}</port>
    <includeCallerData>${includeCallerData}</includeCallerData>
  </appender>

  <root level="debug">
    <appender-ref ref="SERVER" />
  </root>
</configuration>
```

Note that this configuration differs from previous examples using SocketAppender only in the class specified for the appender and in the absence of the remoteHost property; this appender waits passively for inbound connections from remote hosts rather than opening a connection to a remote logging server.

The following example illustrates a configuration using SSLServerSocketAppender.

Example: Basic SSLServerSocketAppender Configuration (logback-examples/src/main/resources/chapters/appenders/socket/ssl/server3.xml)

```xml
<configuration debug="true">
  <appender name="SERVER"
            class="ch.qos.logback.classic.net.server.SSLServerSocketAppender">
    <port>${port}</port>
    <includeCallerData>${includeCallerData}</includeCallerData>
    <ssl>
      <keyStore>
        <location>${keystore}</location>
        <password>${password}</password>
      </keyStore>
    </ssl>
  </appender>

  <root level="debug">
    <appender-ref ref="SERVER" />
  </root>
</configuration>
```

The principal differences between this configuration and the previous one are that the appender's class attribute identifies the SSLServerSocketAppender type, and that the nested ssl element specifies, in this example, a key store containing an X.509 credential for the appender. See Using SSL for information regarding SSL configuration properties.

Because the ServerSocketAppender subtypes are designed to be used with receiver components, we will defer presenting illustrative examples to the chapter entitled Receivers.

SMTPAppender

The SMTPAppender accumulates logging events in one or more fixed-size buffers and sends the contents of the appropriate buffer in an email after a user-specified event occurs. SMTP email transmission (sending) is performed asynchronously. By default, the email transmission is triggered by a logging event of level ERROR. Moreover, by default, a single buffer is used for all events.
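The default buffering and triggering behavior can be sketched as a single bounded buffer that is flushed to a stand-in mailer whenever an ERROR event arrives. CyclicBufferMailer is hypothetical. It uses no discriminator and sends nothing over SMTP, and clearing the buffer after each email is an assumption of the sketch. The buffer size is a constructor argument here; the table below notes that logback's default is 256 events.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical sketch of SMTPAppender-style buffering: one cyclic buffer,
// flushed to a stand-in "mailer" when an ERROR-level event arrives.
class CyclicBufferMailer {
    enum Level { DEBUG, INFO, WARN, ERROR }

    private final int bufferSize;
    private final Deque<String> buffer = new ArrayDeque<>();
    // Each inner list stands in for the body of one outgoing email.
    final List<List<String>> sentEmails = new ArrayList<>();

    CyclicBufferMailer(int bufferSize) {
        if (bufferSize <= 0) {
            throw new IllegalArgumentException("bufferSize must be positive");
        }
        this.bufferSize = bufferSize;
    }

    void append(Level level, String message) {
        if (buffer.size() == bufferSize) {
            buffer.removeFirst(); // buffer full: the oldest event is overwritten
        }
        buffer.addLast(level + " " + message);
        if (level == Level.ERROR) { // default trigger: an event of level ERROR
            sentEmails.add(new ArrayList<>(buffer));
            buffer.clear(); // assumption: start afresh after each email
        }
    }
}
```

With a buffer of three events, an email triggered after five events contains only the last three, the ERROR event included.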

The various properties for SMTPAppender are summarized in the following table.

| Property Name | Type | Description |
|---|---|---|
| smtpHost | String | The host name of the SMTP server. If not set, com.sun.mail.smtp.SMTPTransport defaults to "localhost". |
| smtpPort | int | The port where the SMTP server is listening. Defaults to 25. |
| to | String | The email address of the recipient as a pattern. The pattern is evaluated anew for each outgoing email, with the triggering event as input. Multiple recipients can be specified by separating the destination addresses with commas. Alternatively, multiple recipients can also be specified by using multiple <to> elements. |
| from | String | The originator of the email messages sent by SMTPAppender, in the usual email address format. If you wish to include the sender's name, use the format "Adam Smith <smith@moral.org>" so that the message appears as originating from "Adam Smith <smith@moral.org>". |
| subject | String | The subject of the email. It can be any value accepted as a valid conversion pattern by PatternLayout. Layouts will be discussed in the next chapter. The outgoing email message will have a subject line corresponding to applying the pattern on the logging event that triggered the email message. Assuming the subject option is set to "Log: %logger - %msg", the triggering event's logger is named "com.foo.Bar" and its message is "Hello world", the outgoing email will have the subject line "Log: com.foo.Bar - Hello world". By default, this option is set to "%logger{20} - %m". |
| discriminator | Discriminator | With the help of a Discriminator, SMTPAppender can scatter incoming events into different buffers according to the value returned by the discriminator. By default, a single buffer is used for all events. By specifying a discriminator other than the default one, it is possible to receive email messages containing events pertaining to a particular user, user session or client IP address. |
| evaluator | IEvaluator | This option is declared by creating a new evaluator element whose class attribute names the evaluator class to use. In the absence of this option, SMTPAppender is assigned an instance of OnErrorEvaluator, which triggers email transmission when it encounters an event of level ERROR. Logback ships with several other evaluators. |
| cyclicBufferTracker | CyclicBufferTracker | As the name indicates, an instance of the CyclicBufferTracker class tracks cyclic buffers, one for each value returned by the discriminator. If you don't specify a cyclicBufferTracker, an instance of CyclicBufferTracker will be automatically created. By default, this instance will keep events in a cyclic buffer of size 256. You may change the size with the help of the bufferSize option (see below). |
| username | String | The username value to use during plain user/password authentication. By default, this parameter is null. |
| password | String | The password value to use for plain user/password authentication. By default, this parameter is null. |
| STARTTLS | boolean | If this parameter is set to true, then this appender will issue the STARTTLS command (if the server supports it), causing the connection to switch to SSL. Note that the connection is initially unencrypted. By default, this parameter is set to false. |
| SSL | boolean | If this parameter is set to true, then this appender will open an SSL connection to the server. By default, this parameter is set to false. |
| charsetEncoding | String | The outgoing email message will be encoded in the designated charset. The default charset encoding is "UTF-8", which works well for most purposes. |
| localhost | String | In case the hostname of the SMTP client is not properly configured, e.g. if the client hostname is not fully qualified, certain SMTP servers may reject the HELO/EHLO commands sent by the client. To overcome this issue, you may set the value of the localhost property to the fully qualified name of the client host. See also the "mail.smtp.localhost" property in the documentation for the com.sun.mail.smtp package. |
| asynchronousSending | boolean | This property determines whether email transmission is done asynchronously. By default, asynchronousSending is 'true'. However, under certain circumstances asynchronous sending may be inappropriate. For example, if your application uses SMTPAppender to send alerts in response to a fatal error and then exits, the relevant thread may not have the time to send the alert email. In this case, set asynchronousSending to 'false' for synchronous email transmission. |
| includeCallerData | boolean | By default, includeCallerData is set to false. You should set includeCallerData to true if asynchronousSending is enabled and you wish to include caller data in the logs. |
| sessionViaJNDI | boolean | SMTPAppender relies on javax.mail.Session to send out email messages. By default, sessionViaJNDI is set to false, so the javax.mail.Session instance is built by SMTPAppender itself with the properties specified by the user. If the sessionViaJNDI property is set to true, the javax.mail.Session object will be retrieved via JNDI. See also the jndiLocation property. |
| jndiLocation | String | The location where the javax.mail.Session is placed in JNDI. By default, jndiLocation is set to "java:comp/env/mail/Session". |
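To illustrate how a discriminator and a cyclic buffer tracker cooperate, the sketch below keeps one bounded buffer per discriminating value. It is a hypothetical standalone model, not logback's Discriminator or CyclicBufferTracker API: the discriminator is a plain Function that extracts a user name from each event, an ERROR event "sends" (here, records) and clears only the buffer for its own key, and any cleanup of idle buffers is left out.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

public class DiscriminatedBuffersSketch {

    // Hypothetical event carrying the value the discriminator inspects (a user name).
    static final class Event {
        final String user;
        final String level;
        final String message;

        Event(String user, String level, String message) {
            this.user = user;
            this.level = level;
            this.message = message;
        }
    }

    private final int bufferSize;
    private final Function<Event, String> discriminator;
    // One bounded buffer per discriminating value, created on first use.
    private final Map<String, ArrayDeque<Event>> buffers = new HashMap<>();
    // Stands in for outgoing email: each entry is the body of one message.
    final List<List<String>> sentMails = new ArrayList<>();

    DiscriminatedBuffersSketch(int bufferSize, Function<Event, String> discriminator) {
        this.bufferSize = bufferSize;
        this.discriminator = discriminator;
    }

    void append(Event e) {
        String key = discriminator.apply(e);
        ArrayDeque<Event> buffer = buffers.computeIfAbsent(key, k -> new ArrayDeque<>());
        if (buffer.size() == bufferSize) {
            buffer.removeFirst(); // keep at most bufferSize events per key
        }
        buffer.addLast(e);
        if ("ERROR".equals(e.level)) {
            // Only the buffer belonging to the triggering event's key is sent and cleared.
            List<String> body = new ArrayList<>();
            for (Event ev : buffer) {
                body.add(ev.user + ": " + ev.message);
            }
            sentMails.add(body);
            buffer.clear();
        }
    }

    public static void main(String[] args) {
        DiscriminatedBuffersSketch appender = new DiscriminatedBuffersSketch(256, ev -> ev.user);
        appender.append(new Event("alice", "INFO", "login"));
        appender.append(new Event("bob", "INFO", "login"));
        appender.append(new Event("alice", "ERROR", "payment failed"));
        // prints [[alice: login, alice: payment failed]]
        System.out.println(appender.sentMails);
    }
}
```

Alice's email contains only her own events, while Bob's INFO event stays in his buffer until an error is logged under his name.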

By default, the SMTPAppender keeps only the last 256 logging events in its cyclic buffer, throwing away older events when its buffer becomes full. Thus, the number of logging events delivered in any e-mail sent by SMTPAppender is upper-bounded by 256. This keeps memory requirements bounded while still delivering a reasonable amount of application context.

The SMTPAppender relies on the JavaMail API. It has been tested with JavaMail API version 1.4. The JavaMail API requires the JavaBeans Activation Framework package. You can download the JavaMail API and the JavaBeans Activation Framework from their respective websites. Make sure to place these two jar files in the classpath before trying the following examples.

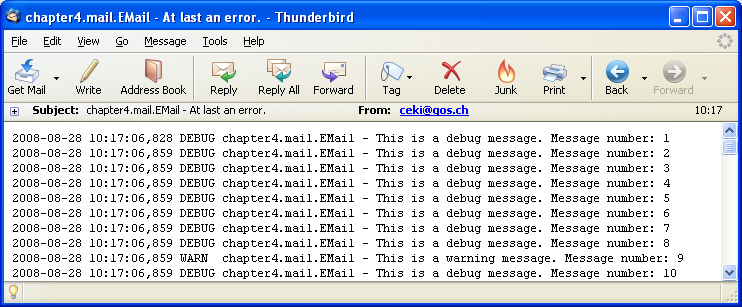

A sample application, chapters.appenders.mail.EMail, generates a number of log messages followed by a single error message. It takes two parameters. The first parameter is an integer corresponding to the number of logging events to generate. The second parameter is the logback configuration file. The last logging event generated by the EMail application, an ERROR, will trigger the transmission of an email message.

Here is a sample configuration file intended for the EMail application:

Example: A sample SMTPAppender configuration (logback-examples/src/main/resources/chapters/appenders/mail/mail1.xml)

<configuration>

<appender name="EMAIL" class="ch.qos.logback.classic.net.SMTPAppender">

<smtpHost>ADDRESS-OF-YOUR-SMTP-HOST</smtpHost>

<to>EMAIL-DESTINATION</to>

<to>ANOTHER_EMAIL_DESTINATION</to> <!-- additional destinations are possible -->

<from>SENDER-EMAIL</from>

<subject>TESTING: %logger{20} - %m</subject>

<layout class="ch.qos.logback.classic.PatternLayout">

<pattern>%date %-5level %logger{35} - %message%n</pattern>

</layout>

</appender>

<root level="DEBUG">

<appender-ref ref="EMAIL" />

</root>

</configuration>

Before trying out the chapters.appenders.mail.EMail application with the above configuration file, you must set the smtpHost, to and from properties to values appropriate for your environment. Once you have set the correct values in the configuration file, run the EMail application, passing 100 as the number of logging events to generate and the path to mail1.xml as the configuration file.

The recipient you specified should receive an email message containing 100 logging events formatted by PatternLayout. The figure below is the resulting email message as shown by Mozilla Thunderbird.

In the next example configuration file mail2.xml, the values for the smtpHost, to and from properties are determined by variable substitution. Here is the relevant part of mail2.xml.

<appender name="EMAIL" class="ch.qos.logback.classic.net.SMTPAppender">

<smtpHost>${smtpHost}</smtpHost>

<to>${to}</to>

<from>${from}</from>

<layout class="ch.qos.logback.classic.html.HTMLLayout"/>

</appender>

You can pass the required parameters on the command line:

java -Dfrom=SENDER-EMAIL -Dto=EMAIL-DESTINATION -DsmtpHost=some_smtp_host \
  chapters.appenders.mail.EMail 10000 src/main/java/chapters/appenders/mail/mail2.xml

Be sure to replace the from, to and smtpHost values with ones appropriate for your environment.
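Variable substitution boils down to replacing each ${name} token with a value looked up by name, here the system properties set with -D. The sketch below shows only that core idea: the substitute method is a hypothetical helper, it leaves unresolved names untouched, and it does not model logback's other variable scopes or its support for default values.

```java
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class VariableSubstitutionSketch {

    // Matches ${name} and captures the name.
    private static final Pattern VARIABLE = Pattern.compile("\\$\\{([^}]+)\\}");

    // Hypothetical helper: replaces each ${name} with lookup.apply(name).
    // Unknown names are left as-is here; logback itself treats undefined variables differently.
    static String substitute(String text, Function<String, String> lookup) {
        Matcher m = VARIABLE.matcher(text);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = lookup.apply(m.group(1));
            String replacement = (value != null) ? value : m.group();
            // quoteReplacement keeps '$' and '\' in values from being read as group references
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // Run with -DsmtpHost=some_smtp_host to print <smtpHost>some_smtp_host</smtpHost>
        System.out.println(substitute("<smtpHost>${smtpHost}</smtpHost>", System::getProperty));
    }
}
```

Passing the lookup as a Function keeps the sketch testable: System::getProperty reads -D values, while a Map can stand in for them in a test.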

Note that in this latest example, PatternLayout was replaced by HTMLLayout, which formats logs as an HTML table. You can change the list and order of columns as well as the CSS of the table. Please refer to the HTMLLayout documentation for further details.

Given that the size of the cyclic buffer is 256, the recipient should see an email message containing 256 events conveniently formatted in an HTML table. Note that this run of the chapters.appenders.mail.EMail application generated 10'000 events, of which only the last 256 were included in the outgoing email.

Email clients such as Mozilla Thunderbird, Eudora or MS Outlook offer reasonably good CSS support for HTML email. However, they sometimes automatically downgrade HTML to plaintext. For example, to view HTML email in Thunderbird, the "View→Message Body As→Original HTML" option must be set. Yahoo! Mail's support for HTML email, in particular its CSS support, is very good. Gmail, on the other hand, honors the basic HTML table structure but ignores the internal CSS formatting. Gmail supports inline CSS formatting, but since inline CSS would make the resulting output too voluminous, HTMLLayout does not use inline CSS.

Custom buffer size

By default, the outgoing message will contain the last 256

messages seen by SMTPAppender. If your heart so

desires, you may set a different buffer size as shown in the next example.

Example: SMTPAppender configuration with a custom buffer size (logback-examples/src/main/resources/chapters/appenders/mail/customBufferSize.xml)

<configuration>

<appender name="EMAIL" class="ch.qos.logback.classic.net.SMTPAppender">

<smtpHost>${smtpHost}</smtpHost>

<to>${to}</to>

<from>${from}</from>

<subject>%logger{20} - %m</subject>

<layout class="ch.qos.logback.classic.html.HTMLLayout"/>

<cyclicBufferTracker class="ch.qos.logback.core.spi.CyclicBufferTracker">

<!-- send just one log entry per email -->

<bufferSize>1</bufferSize>

</cyclicBufferTracker>

</appender>

<root level="DEBUG">

<appender-ref ref="EMAIL" />

</root>

</configuration>

Triggering event

If the evaluator property is not set, the

SMTPAppender defaults to an OnErrorEvaluator

instance which triggers email transmission when it encounters an

event of level ERROR. While triggering an outgoing email in

response to an error is relatively reasonable, it is possible to